OpenSecret Technicals

With our newly released Maple AI and the open sourcing of our OpenSecret platform code, we present this technical primer on how we built our confidential compute developer platform leveraging secure enclaves.

Building Our Confidential Backend on Secure Enclaves

With our newly released private and confidential Maple AI and the open sourcing of our OpenSecret platform code, I'm excited to present this technical primer on how we built our confidential compute platform leveraging secure enclaves. By combining AWS Nitro enclaves with end-to-end encryption and reproducible builds, our platform gives developers and end users the confidence that user data is protected, even at runtime, and that the code operating on their data has not been tampered with.

Auth and Databases Today

As developers, we live in an era where protecting user data means "encryption at rest," plus some access policies and procedures. Developers typically run servers that:

- Need to register users (authentication).

- Collect and process user data in business-specific ways, often on the backend.

Even if data is encrypted at rest, it's commonly unlocked with a single master key or credentials the server holds. This means that data is visible during runtime to the application, system administrators, and potentially to the hosting providers. This scenario makes it difficult (or impossible) to guarantee that sensitive data isn't snooped on, memory-dumped, or used in unauthorized ways (for instance, training AI models behind the scenes).

"Just Trust Us" Isn't Good Enough

In a traditional server architecture, users have to take it on faith that the code handling their data is the same code the operator claims to be running. Behind the scenes, applications can be modified or augmented to forward private information elsewhere, and there is no transparent way for users to verify otherwise. This lack of proof is unsettling, especially for services that process or store highly confidential data.

Administrators, developers, or cloud providers with privileged access can inspect memory in plaintext, attach debuggers, or gain complete visibility into stored information. Hackers who compromise these privileged levels can directly access sensitive data. Even with strict policies or promises of good conduct, the reality is that technical capabilities and misconfigurations can override words on paper. If a server master key can decrypt your data or can be accessed by an insider with root permissions, then "just trust us" loses much of its credibility.

The rise of AI platforms amplifies this dilemma. User data, often full of personal details, gets funneled into large-scale models that might be training or fine-tuning behind the scenes. Relying on vague assurances that "we don't look at your data" is no longer enough to prevent legitimate concerns about privacy and misuse. Now more than ever, providing a strong, verifiable guarantee that data remains off-limits, even when actively processed, has become a non-negotiable requirement for trustworthy services.

Current Attempts at Securing Data

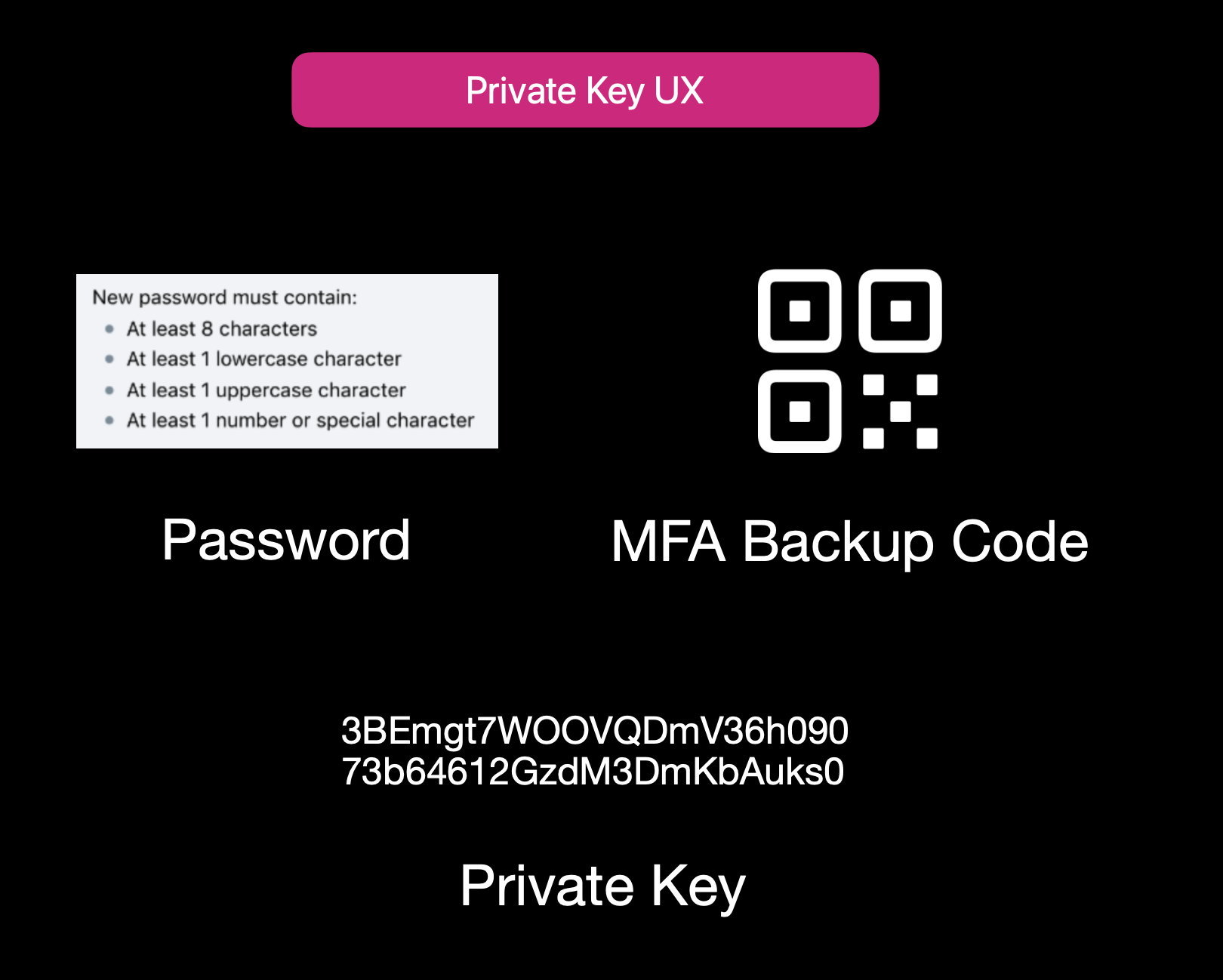

Properly securing data is hard, but that doesn't mean no one is trying. Some solutions use end-to-end encryption (E2EE), where user data is encrypted client-side with a password or passphrase, so not even the server operator can decrypt it. That approach can be quite secure, but it has limitations:

- Key Management Nightmares: If a user forgets their passphrase, the data is effectively lost, and there's no way to recover it from the developer's side.

- Feature Limitations: Complex server-side operations (like offline/background tasks, AI queries, real-time collaboration, or heavy computation) can't easily happen if the server is never capable of processing decrypted data.

- Platform Silos: Some solutions rely on iCloud, Google Drive, or local device storage. That can hamper multi-device usage or multi-OS compatibility.

Other approaches include self-hosting. However, self-hosting either burdens users with DevOps overhead or, if you "self-host" on a cloud provider, reverts to the "trust me" model for the server.

Secure Enclaves

The Hybrid Approach

Secure enclaves offer a compelling middle ground. They keep data secure from prying admins while still allowing meaningful server-side computation. In a nutshell, an enclave is a protected environment within a machine, isolated at the hardware level, so that even if the OS or server is compromised, the data and code inside the enclave remain hidden.

High-Level Goal of Enclaves

Enclaves, also known under the broader umbrella of confidential computing, aim to:

• Lock down data so that only authorized code within the enclave can process the original plaintext data.

• Deny external inspection by memory dumping, attaching a debugger, or intercepting plaintext network traffic.

• Prove to external users or services that an enclave is running unmodified, approved code (this is where remote attestation comes in).

Different Secure Enclave Solutions

AMD SEV (Secure Encrypted Virtualization) encrypts an entire virtual machine's memory so that even a compromised hypervisor cannot inspect or modify guest data. Its core concept is "lift-and-shift" security. No application refactoring is required because hardware-based encryption automatically protects the OS and all VM applications. Later enhancements (SEV-ES and SEV-SNP) added encryption of CPU register states and memory integrity protections, further limiting hypervisor tampering. This broad coverage means the guest OS is included in the trusted boundary. AMD SEV has matured into a robust solution for confidential VMs in multi-tenant clouds.

Intel TDX (Trust Domain Extensions) shifts from the process-level enclaves of Intel SGX (Software Guard Extensions) to full VM encryption, allowing an entire guest operating system and its applications to run in an isolated "trust domain." Like AMD SEV, Intel TDX encrypts and protects all memory the VM uses from hypervisors or other privileged software, so developers do not need to refactor their code to benefit from hardware-based confidentiality. This broader scope addresses many SGX limitations, such as strict memory bounds and the need to split out enclave-specific logic, and offers a more straightforward "lift-and-shift" path for running existing workloads privately. SGX has been deprecated on Intel's client processors, and TDX carries forward the core confidential computing principles but applies them at the virtual machine level for broader isolation, easier deployment, and the ability to scale up to large, memory-intensive applications.

Apple Secure Enclave and Private Cloud Compute: the Secure Enclave is a dedicated security coprocessor embedded in most Apple devices (iPhones, iPads, Macs). It runs its own microkernel, has hardware-protected memory, and securely manages operations such as biometric authentication, key storage, and cryptographic tasks. Apple's Private Cloud Compute extends this approach to its server-side AI infrastructure, aiming for on-device-grade privacy even when requests are processed in Apple's data centers.

AWS Nitro Enclaves carve out a tightly isolated "mini-VM" from a parent EC2 instance, with its own vCPUs and memory guarded by dedicated Nitro cards. The enclave has no persistent storage and no external network access, significantly reducing the attack surface. Communication with the parent instance occurs over a secure local channel (vsock), and AWS offers hardware-based attestation so that secrets (e.g., encryption keys from AWS KMS) are released only to the correct enclave. This design helps developers protect sensitive data or code even if the main EC2 instance's OS is compromised.
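Because vsock behaves like an ordinary stream socket, the parent instance and the enclave need some way to mark message boundaries. The following is a minimal sketch of length-prefixed framing, not OpenSecret's actual wire format. The 4-byte big-endian header, the helper names, and the `cid`/`port` values are assumptions made for illustration; `socket.AF_VSOCK` is available in Python on Linux only.

```python
import socket
import struct


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one message prefixed with its length as a 4-byte big-endian integer."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return _recv_exact(sock, length)


def connect_to_enclave(cid: int, port: int) -> socket.socket:
    """Open a vsock stream to an enclave (Linux only).

    cid and port are deployment-specific values, hypothetical here.
    """
    sock = socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM)
    sock.connect((cid, port))
    return sock
```

The framing helpers work on any stream socket, so they can be exercised locally with `socket.socketpair()` before being pointed at a real enclave.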

NVIDIA GPU TEEs (Hopper H100 and Blackwell) extend confidential computing to accelerated workloads by encrypting data in GPU memory and ensuring that even a privileged host cannot view or tamper with it. Data moving between CPU and GPU is encrypted in transit, so sensitive model weights or inputs remain protected during AI training or inference. NVIDIA's hardware and drivers handle secure data paths under the hood, allowing confidential large language model (LLM) workloads and other GPU-accelerated computations to run with minimal performance overhead and strong security guarantees.

Key Benefits

One major advantage of enclaves is their ability to keep memory completely off-limits to outside prying eyes. Even administrators who can normally inspect processes at will are blocked from peeking into the enclave's protected memory space. This is a major shift in the security model: it prevents casual inspection and defends against sophisticated memory dumping techniques that might otherwise leak secrets or sensitive data.

Another key benefit centers on cryptographic keys that are never exposed outside the enclave. Only verified code running inside the enclave environment can run decryption or signing operations, and it can only do so while that specific code is running. This ensures that compromised hosts or rogue processes, even those with high-level privileges, are unable to intercept or misuse the keys because the keys remain strictly within the trusted boundary of the hardware.

Enclaves can also offer the power of remote attestation, allowing external clients or systems to confirm that they're speaking to an authentic, untampered enclave. By validating the hardware's integrity measurements and enclave-specific proofs, the remote party can be confident in the underlying security properties, an important guarantee in multi-tenant environments or whenever trust boundaries extend across different organizations and networks.
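As a rough illustration of what a client checks during remote attestation, the sketch below applies three policy checks to an attestation document that has already been parsed and whose signature chain has already been verified (that step is omitted here). The field names `nonce`, `pcrs`, and `timestamp` (milliseconds since the epoch) follow the AWS Nitro attestation document format; the freshness window and function names are assumptions chosen for the example.

```python
import hmac
import secrets
import time

MAX_AGE_SECONDS = 300  # hypothetical freshness window, not a recommended value


def new_nonce() -> bytes:
    """Random challenge the client sends so a replayed document can be detected."""
    return secrets.token_bytes(32)


def check_attestation(doc: dict, expected_pcr0: bytes, nonce: bytes, now_ms=None) -> list:
    """Return a list of policy failures; an empty list means the document is accepted.

    Assumes the document's signature and certificate chain were verified beforehand.
    """
    failures = []
    now_ms = int(time.time() * 1000) if now_ms is None else now_ms

    # The enclave must echo the exact nonce we sent in this session.
    if not hmac.compare_digest(doc.get("nonce") or b"", nonce):
        failures.append("nonce mismatch (possible replay)")

    # PCR0 is the measurement of the enclave image; it must equal the published value.
    pcr0 = doc.get("pcrs", {}).get(0, b"")
    if not hmac.compare_digest(pcr0, expected_pcr0):
        failures.append("PCR0 does not match the expected image measurement")

    # Reject documents far from the current time in either direction.
    age_ms = now_ms - doc.get("timestamp", 0)
    if abs(age_ms) > MAX_AGE_SECONDS * 1000:
        failures.append("attestation document is stale")

    return failures
```

Returning a list of failures rather than a single boolean makes it easier to show the user which check failed.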

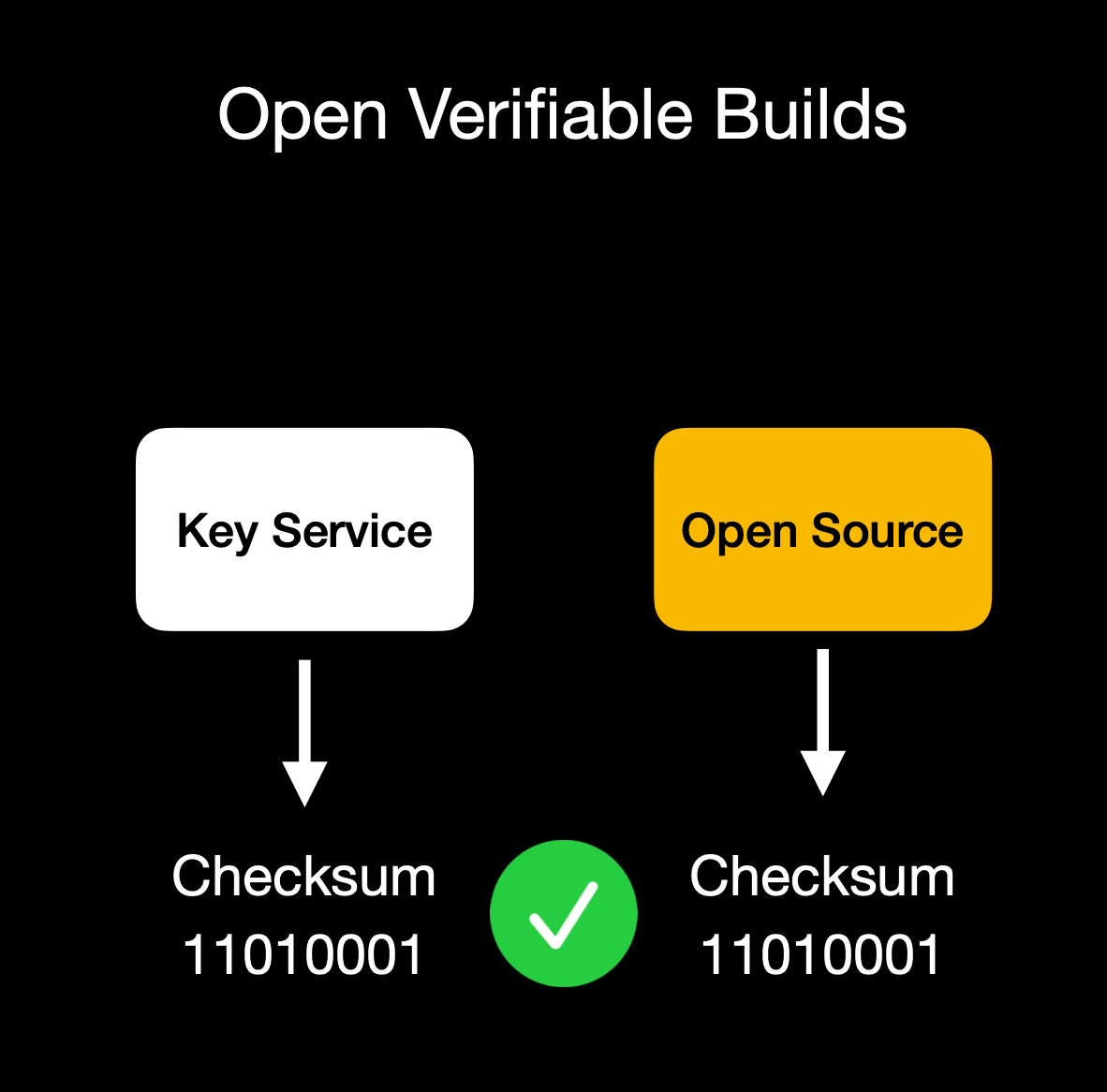

Beyond that, reproducible builds can create a verifiable fingerprint proving which binary runs in the enclave. This is a step above a simple "trust us" approach. Anyone can independently recreate the enclave image and verify the resulting cryptographic hash by using a reproducible build system (for example, our NixOS-based solution). If it matches, then users and developers know precisely how code handles their data, boosting confidence that no hidden changes exist.

It's worth noting that although enclaves shield you from software devs, cloud providers, and insider threats, you do have to trust the hardware vendor (Intel, AMD, Apple, AWS, or NVIDIA) to implement their microcode and firmware securely. The entire enclave model could be theoretically undermined if a CPU maker's root keys or manufacturing process were compromised. Fortunately, these companies undergo extensive audits and firmware validations (often with third-party researchers), and their remote attestation mechanisms allow you to confirm specific firmware versions before trusting an enclave. While this adds a layer of "vendor trust," it's still a far more contained risk than trusting an entire operating system or cloud stack, so enclaves remain a strong step forward in practical, confidential computing.

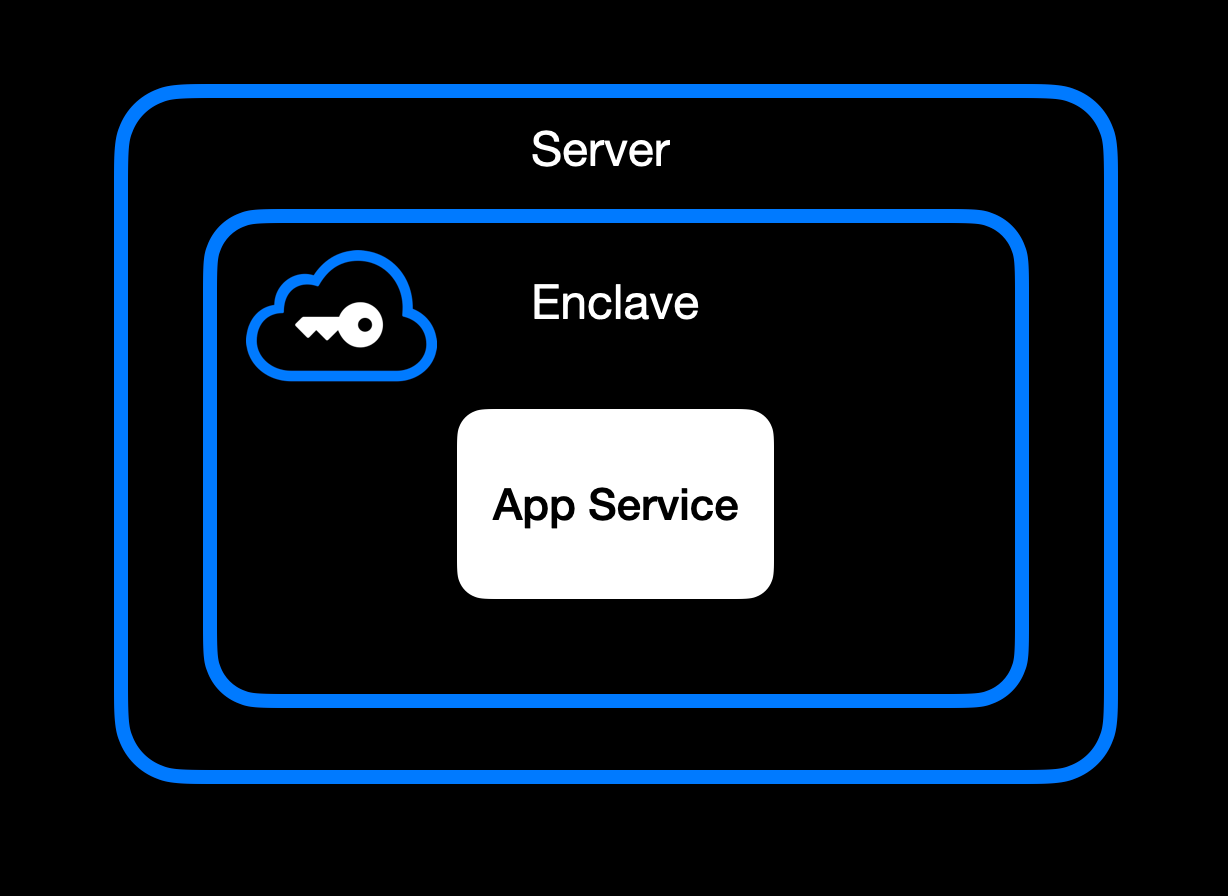

How We Use Secure Enclaves

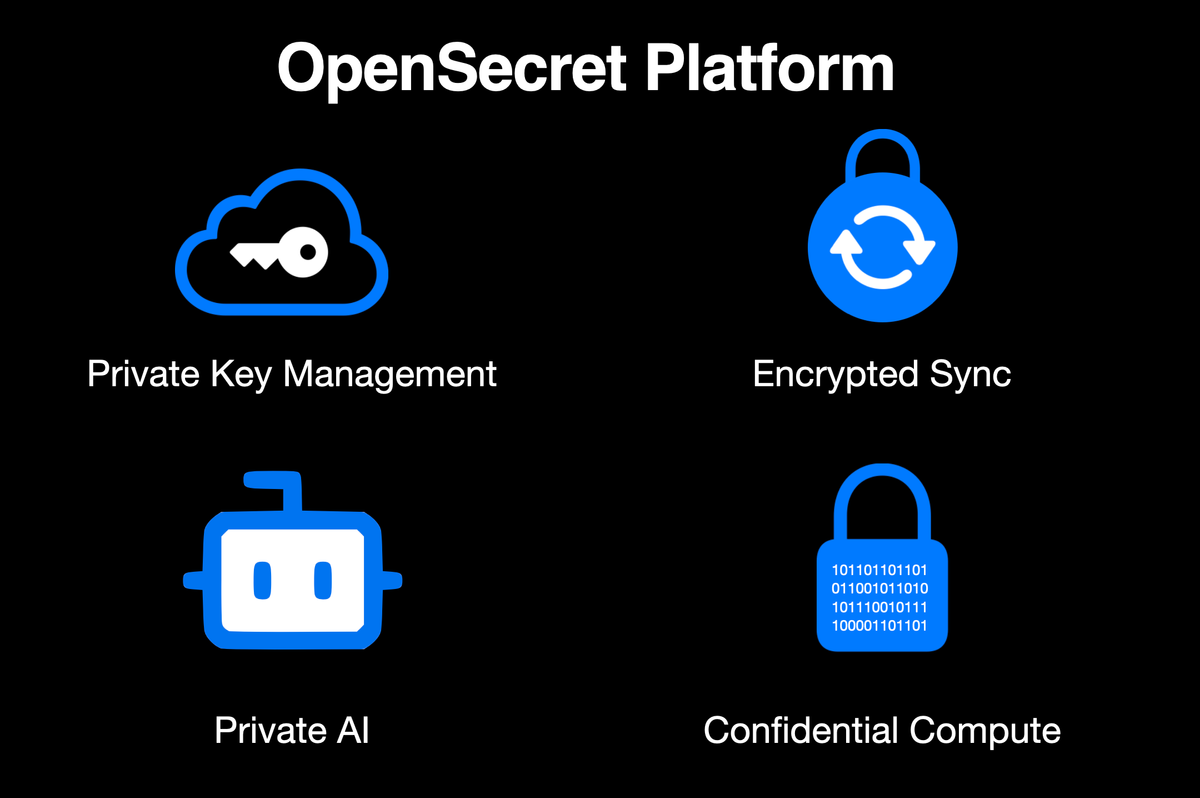

Now that we've covered the general idea of enclaves, let's look at how we specifically implement them in OpenSecret, our developer platform for handling user auth, private keys, data encryption, and AI workloads.

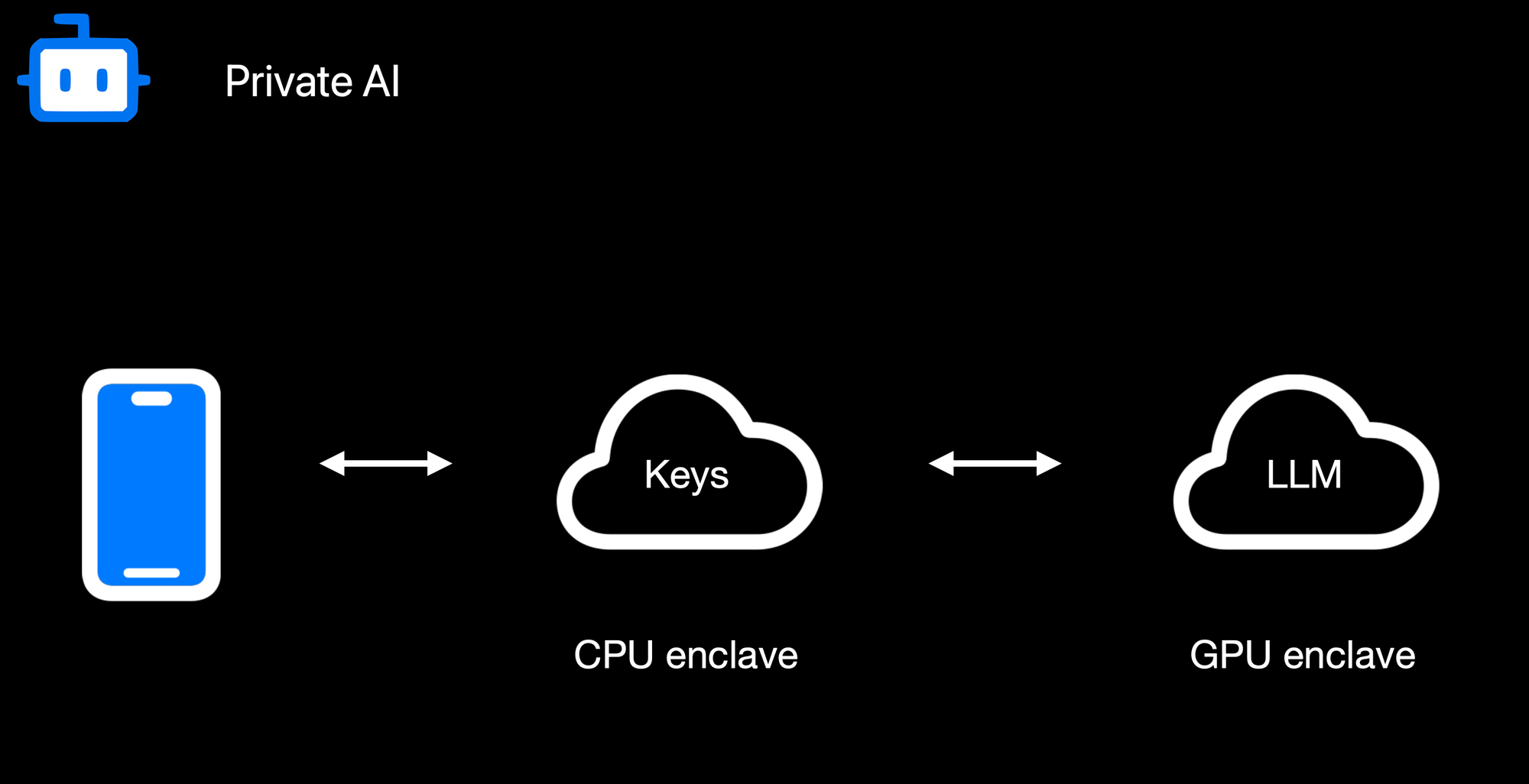

Our Stack: AWS Nitro + NVIDIA TEE

• AWS Nitro Enclaves for the backend: All critical logic, authentication, private key management, and data encryption/decryption run inside an AWS Nitro Enclave.

• NVIDIA Trusted Execution for AI: For large AI inference (such as the Llama 3.3 70B model), we use NVIDIA's GPU-based TEEs to protect even GPU memory. This means users can feed sensitive data to the AI model without exposing it in plaintext to the GPU providers or to us as the operator. Edgeless Systems is our NVIDIA TEE provider, and because we can verify the enclave, we don't need to worry about who runs the GPUs. We know requests can't be inspected or tampered with.

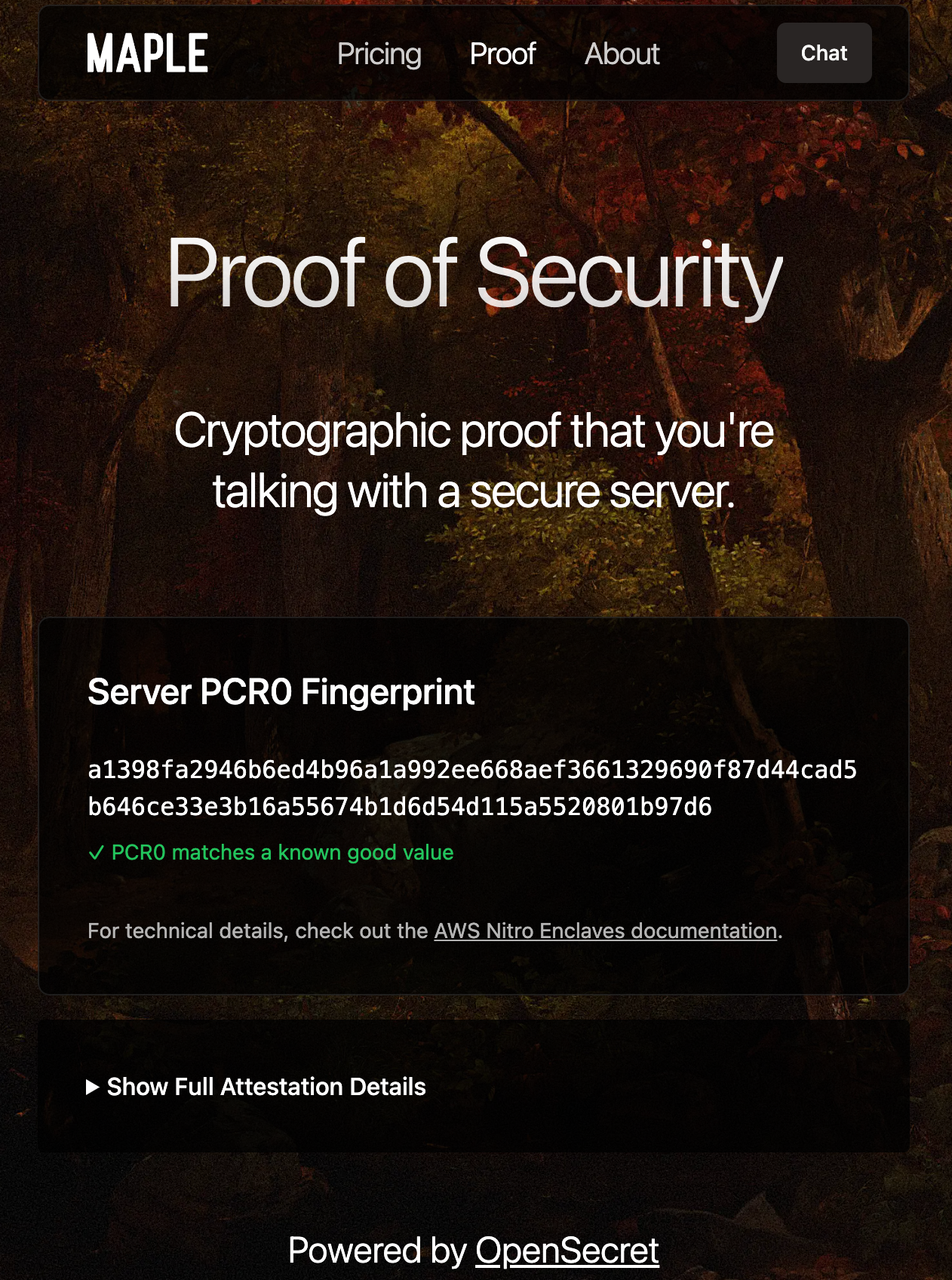

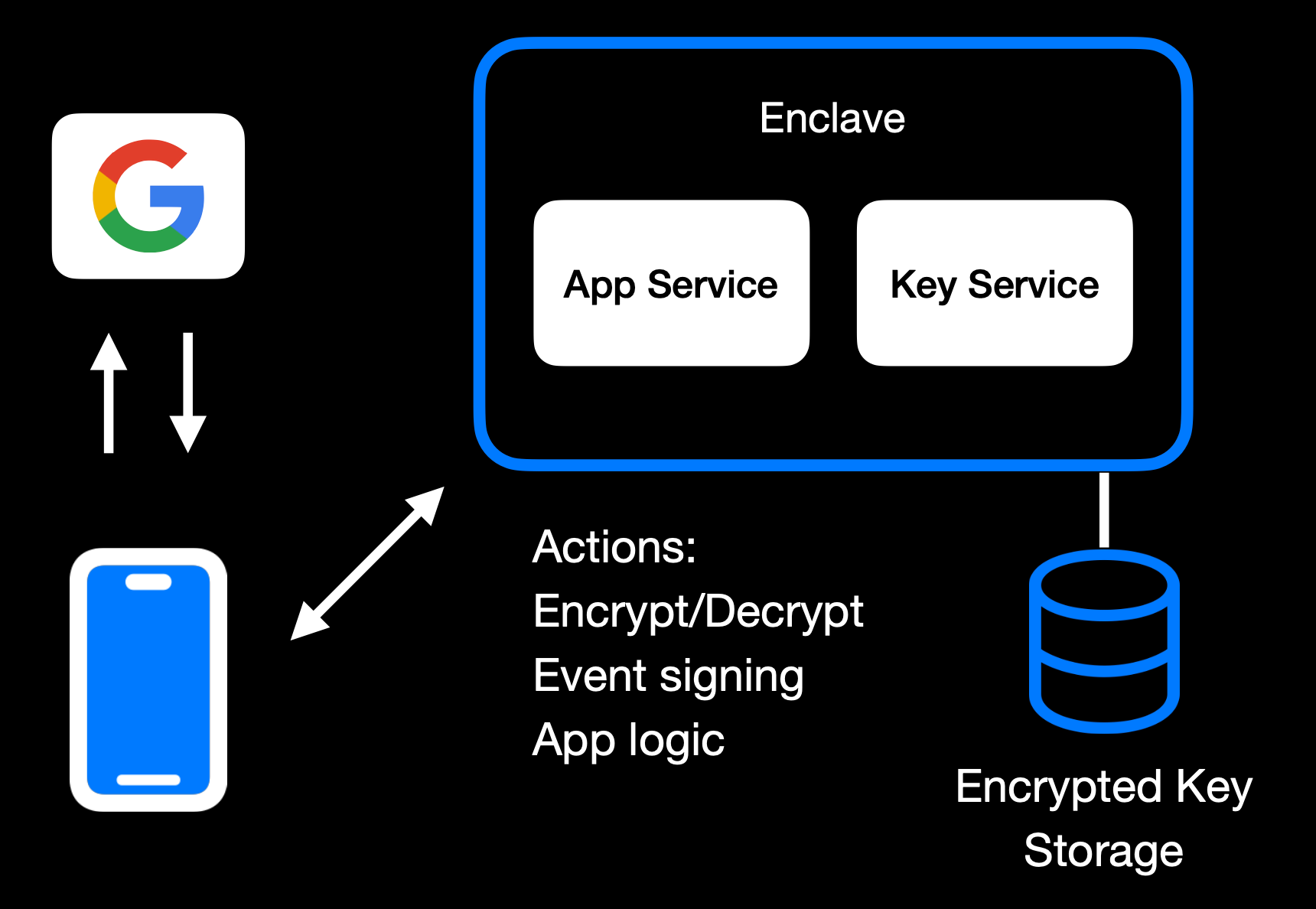

End-to-End Encryption from Client to Enclave

Before login or data upload, the user/client verifies the enclave attestation from our platform. This process proves that the specific Nitro Enclave is genuine and runs the exact code we've published. You can check this out live on Maple AI's attestation page.

Based on the attestation, the client establishes a secure, ephemeral communication channel that only that enclave can decrypt. We still use TLS, but it is typically terminated outside the enclave itself. To keep data encrypted all the way into the enclave, we perform an additional handshake based on the attestation document, and the resulting channel is used for all API requests during the client session.
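One common way to turn such a handshake into usable session keys is a key derivation function. The sketch below assumes the client and enclave have already agreed on a shared secret through an ephemeral key exchange against a public key carried in the attestation document (the exchange itself is not shown), and derives one key per direction with HKDF (RFC 5869) built on HMAC-SHA256. The function names, the use of the attestation nonce as salt, and the `info` labels are illustrative assumptions, not OpenSecret's actual protocol.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869): extract a pseudorandom key, then expand it to `length` bytes."""
    if length > 255 * 32:
        raise ValueError("requested output is too long for HKDF-SHA256")
    # Extract: an empty salt is replaced by a block of zeros, as the RFC specifies.
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    # Expand: chain HMAC blocks, each bound to the previous block, info, and a counter.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_session_keys(shared_secret: bytes, attestation_nonce: bytes):
    """Derive separate keys for each direction of an ephemeral session.

    Salting with the attestation nonce ties the keys to this attested session
    (an assumption made for this sketch).
    """
    client_to_enclave = hkdf_sha256(shared_secret, attestation_nonce, b"example client-to-enclave")
    enclave_to_client = hkdf_sha256(shared_secret, attestation_nonce, b"example enclave-to-client")
    return client_to_enclave, enclave_to_client
```

Using distinct `info` labels gives each direction its own key, so a message captured in one direction cannot be reflected back in the other.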

From there, the user's credentials, private keys, and data pass through this secure channel directly into the enclave, where they are decrypted and processed according to the user's request.

In-Enclave Operations

At the core of OpenSecret's approach is the conviction that security-critical tasks must happen inside the enclave, where even administrative privileges or hypervisor-level compromise cannot expose plaintext data. This encompasses everything from when a user logs in to creating and managing sensitive cryptographic keys. By confining these operations to a protected hardware boundary, developers can focus on building their applications without worrying about accidental data leaks, insider threats, or malicious attempts to harvest credentials. The enclave becomes the ultimate gatekeeper: it controls how data flows and ensures that nothing escapes in plain form.

A primary example is user authentication. All sign-in workflows, including email/password, OAuth, and upcoming passkey-based methods, are handled entirely within the enclave. As soon as a user's credentials enter our platform through the encrypted channel, they are routed straight into the protected environment, bypassing the host's operating system or any potential snooping channels. From there, authentication and session details remain in the enclave, ensuring that privileged outsiders cannot intercept or modify them. By centralizing these identity flows within a sealed environment, developers can assure their users that no one outside the enclave (including the cloud provider or the app's own sysadmins) can peek at, tamper with, or access sensitive login information.
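To make the email/password case concrete, here is a minimal sketch of the kind of check that would run inside the enclave once credentials arrive over the encrypted channel. It uses PBKDF2 from Python's standard library; the iteration count, salt size, and function names are assumptions for the example and not a description of OpenSecret's implementation.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # hypothetical work factor; tune for your hardware


def hash_password(password: str, salt=None, iterations: int = ITERATIONS):
    """Derive a salted password hash; returns (salt, iterations, digest) for storage."""
    salt = secrets.token_bytes(16) if salt is None else salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, iterations, digest


def verify_password(password: str, salt: bytes, iterations: int, expected: bytes) -> bool:
    """Recompute the hash and compare in constant time to avoid timing leaks."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, expected)
```

Because both functions run inside the enclave in this model, the plaintext password exists only within the hardware boundary; only the salt, iteration count, and digest would ever need to be persisted.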

The same principle applies to private key management. Whether keys are created fresh in the enclave or securely transferred into it, they remain sealed away from the rest of the system. Operations like digital signing or content decryption happen only within the hardware boundary, so raw keys never appear in any log, file system, or memory space outside the enclave. Developers retain the functionality they need, such as verifying user actions, encrypting data, or enabling secure transactions without ever exposing keys to a broader (and more vulnerable) attack surface. User backup options exist as well, where the keys can be securely passed to the end user.

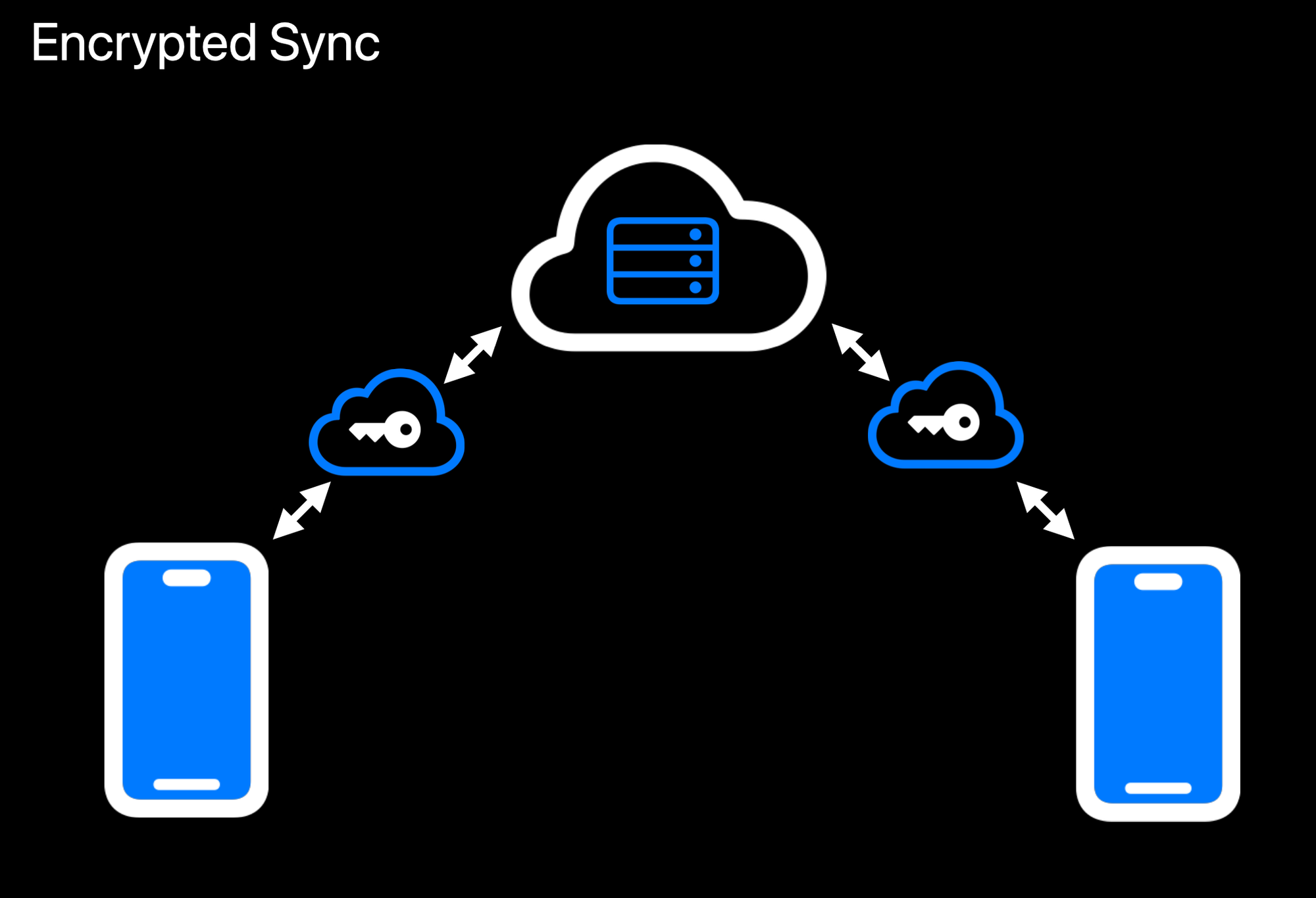

Another crucial aspect is data encryption at rest. While user data ultimately needs to be stored somewhere outside the enclave, the unencrypted form of that data only exists transiently inside the protected environment. Encryption and decryption routines run within the enclave, which holds the encryption keys strictly in memory under hardware guards. If a user uploads data, it is promptly secured before it leaves the enclave. When data is retrieved, it remains encrypted until it reenters the protected region and is passed back to the user through the secured communication channel. This ensures that even if someone gains access to the underlying storage or intercepts data in transit, they will see only meaningless ciphertext.

Finally, confidential AI workloads build upon this same pattern: the Nitro enclave re-encrypts data so it can be processed inside a GPU-based trusted execution environment (TEE) for inference or other advanced computations. Sensitive data, like user-generated text or private documents, never appears in the clear on the host or within GPU memory outside the TEE boundary. When an AI process finishes, only the results are returned to the enclave, which can then relay them securely to the requesting user. By seamlessly chaining enclaves together, from CPU-based Nitro Enclaves to GPU-accelerated TEEs, we can deliver robust, hardware-enforced privacy for virtually any type of server-side or AI-driven operation.

Reproducible Builds + Verification

We build our enclaves on NixOS with reproducible builds, ensuring that anyone can verify that the binary we publish is indeed the binary running in the enclave. This build process is essential for proving we haven't snuck in malicious code to exfiltrate data or collect sensitive logs.

Our code is fully open source (GitHub: OpenSecret), so you can audit or run it yourself. You can also verify that the cryptographic measurement the build process outputs matches the measurement reported by the enclave during attestation.
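The comparison described above can be sketched in a few lines. This example assumes the build emits its measurements in the JSON shape that AWS's `nitro-cli build-enclave` prints (a `Measurements` object with hex-encoded `PCR0`, `PCR1`, and `PCR2`) and that the attested values have already been extracted from a verified attestation document as a mapping from PCR index to bytes. The function names are hypothetical.

```python
import hmac
import json


def load_build_pcrs(build_output_json: str) -> dict:
    """Parse build output into {pcr_index: measurement_bytes}."""
    measurements = json.loads(build_output_json)["Measurements"]
    return {
        int(name[3:]): bytes.fromhex(value)
        for name, value in measurements.items()
        if name.startswith("PCR")  # skips non-PCR keys such as "HashAlgorithm"
    }


def mismatched_pcrs(built: dict, attested: dict) -> list:
    """Return the sorted PCR indexes whose attested value differs from the local build."""
    return sorted(
        index
        for index, value in built.items()
        if not hmac.compare_digest(value, attested.get(index, b""))
    )
```

An empty result from `mismatched_pcrs` means the image you built from source has the same measurements as the enclave that produced the attestation.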

Putting It All Together

By weaving secure enclaves into every step, from authentication to data handling to AI inference, we shift the burden of trust away from human policies and onto provable, hardware-based protections. As an app developer, you can offer your users robust privacy guarantees without rewriting all your business logic or building an entire security stack from scratch. Whether you're storing user credentials or running complex operations on sensitive data, the enclave approach ensures plaintext remains inaccessible to even the most privileged parties outside the enclave boundary. Developers can focus on building great apps, while OpenSecret handles the cryptographic "lock and key" behind the scenes.

This model provides a secure-by-design environment for industries that demand strict data confidentiality, such as healthcare, fintech, cryptocurrency apps for secure key management, or decentralized identity platforms. Instead of worrying about memory dumps or backend tampering, you can trust that once data enters the enclave, it's sealed off from unauthorized eyes, including from the app developers themselves. And these safeguards don't just apply to niche use cases. Even general-purpose applications that handle login flows and user-generated content stand to benefit, especially as regulatory scrutiny grows around data privacy and insider threats.

Imagine a telehealth startup using OpenSecret enclaves to protect patient information for remote consultations. Not only would patient data remain encrypted at rest, but any AI-driven analytics to assist with diagnoses could be run privately within the enclave, ensuring no one outside the hardware boundary can peek at sensitive health records. A fintech company could similarly isolate confidential financial transactions, preventing even privileged insiders from viewing or tampering with raw transaction details. These real-world implementations give developers a clear path to adopting enclaves for serious privacy and compliance needs without overhauling their infrastructure.

OpenSecret aims to be a full developer platform with end-to-end security from day one. By incorporating user authentication, data storage, and GPU-based confidential AI into a single service, we eliminate many of the traditional hurdles in adopting enclaves. No more juggling separate tools for cryptographic key management, compliance controls, and runtime privacy. Instead, you get a unified stack that keeps data encrypted in transit, at rest, and in use.

Our solution also caters to the exploding demand for AI applications: with TEE-enabled GPU workloads, you can securely process sensitive data for text inference without ever exposing raw plaintext or sensitive documents to the host system.

The result is a new generation of apps that deliver advanced functionality, like real-time encrypted data sync or AI-driven insights, while preserving user privacy and meeting strict regulatory requirements. You don't have to rely on empty "trust us" promises because hardware enclaves, remote attestation, and reproducible builds collectively guarantee the code is running untampered. In short, OpenSecret offers the building blocks needed to create truly confidential services and experiences, allowing you to innovate while ensuring data protection remains ironclad.

Things to Come

We're excited to build on our enclaved approach. Here's what's on our roadmap:

• Production Launch: We're using this in production now with Maple AI and have a developer preview playground up and running. We'll have the developer environment ready for production in a few months.

• Multi-Tenant Support: Our platform currently works for single tenants, but we're opening this up so developers can onboard without needing a dedicated instance.

• Self-Serve Frontend: A dev-friendly portal for provisioning apps, connecting OAuth or email providers, and managing users.

• External Key Signing Options: Integrations with custom hardware security modules (HSMs) or customer-run key managers that process data only after verifying the enclave attestation.

• Confidential Computing as a Service: We'll expand our platform so that other developers can quickly create enclaves for specialized workloads without dealing with the complexities of Nitro or GPU TEEs.

• Additional SDKs: In addition to our JavaScript client-side SDK, we plan to launch official support for Rust, Python, Swift, Java, Go, and more.

• AI API Proxy with Attestation/Encryption: We already provide an easy way to access a private AI through Maple AI, but we'd like to open this up more for existing tools and developers. We'll provide a proxy server that users can run on their local machines or servers and that properly handles encryption to our OpenAI-compatible API.

Getting Started

Ready to see enclaves in action? Here's how to dive in:

1. Run OpenSecret: Check out our open-source repository at OpenSecret on GitHub. You can run your own enclaved environment or try it out locally with Docker.

2. Review Our SDK: Our JavaScript client SDK makes it easy to handle sign-ins, put/get encrypted data, sign with user private keys, etc. It handles attestation verification and encryption under the hood, making the API integration seamless.

3. Play with Maple AI: Try out Maple AI as an example of an AI app built directly on OpenSecret. Your queries are encrypted end to end, and the Llama model sees them only inside the TEE.

4. Developer Preview: Contact us if you want an invite to our early dev platform. We'll guide you through our SDK and give you access to the preview server. We'd love to build with you and incorporate your feedback as we develop this further.

Conclusion

By merging secure enclaves (AWS Nitro and NVIDIA GPU TEEs), user authentication, private key management, and an end-to-end verifiable encrypted approach, OpenSecret provides a powerful platform that protects user data during collection, storage, and processing. Whether it's for standard user management, handling private cryptographic keys, or powering AI inference, the technology ensures that no one, not even us or the cloud provider, can snoop on data in use.

We believe this is the future of trustworthy computing in the cloud. And it's all open source, so you don't have to just take our word for it: you can see and verify everything yourself.

Do you have questions, feedback, or a use case you'd like to test out? Come join us on GitHub, Discord, or email us for a developer preview. We can't wait to see what you build!

Thank you for reading, and welcome to the era of enclaved computing.